It became very clear to me after several months with my latest customer that they were going to need a method for provisioning Exchange objects that didn't involve granting rights and distributing the Exchange Management Console to their global IT workforce. The customer was replacing GroupWise 6.x with Exchange 2007 and wanted to take the opportunity to hem in and centralize the widely distributed server and administrative footprint that is so common to GroupWise and NDS. The proposed Exchange architecture consolidated all servers into a central data center, but there was still discussion on the best way to distribute administrative tasks.

In centralizing Exchange, my customer needed a mechanism through which requests for adds, changes, and deletes could be made to a centralized provisioning team who would then perform the tasks on the production system. Of course there are several ways to do this. We could introduce a new manual process or leverage the customer's existing ticketing system... or we could experiment a little and do something simple and elegant.

Now it should be known that I'm not a dev-guy. I'm a wannabe. A poser! The reality is that I'm an infrastructure guy that can script. But, I'm always up for a challenge and always looking for opportunities to build "real" dev skills. As I looked at this problem, I saw an opportunity for a poser to develop a simple solution using PowerShell, ASP.NET, and C#.

As all Exchange aficionados know, PowerShell rocks! In my opinion, next to x64, it is the innovation in Exchange 2007. It is the foundation on which the solution was built. But PowerShell could not front-end a simple solution, nor could it be distributed easily. Wrapping PowerShell into a Win32/64 app using C# could simplify the end-user experience, but the distribution and updating of that type of application is burdensome. The last possibility was to wrap PowerShell into a web-based application. An easy decision, but one also fraught with pitfalls. It is my hope that this blog will help others maneuver around those pitfalls.

Working with my good friend and colleague, Chad Gau (formerly of EMC, now with Statera), we designed a solution that was broken up into two distinct applications derived from the same code base. The first application developed was the provisioning application. We titled this application ExOM (Exchange Object Management). The second application was the requesting application, which we titled ExReq (Exchange Requests). As noted, these applications were derived from the same code base, yet there is a fundamental difference: ExOM has PowerShell wrapped up and executes directly against Exchange and Active Directory, while ExReq simply takes the input and packages it off via email to the customer's ticket-generating system, where the request is routed appropriately.

What is common between ExOM and ExReq is the User Interface that is based on a hierarchy of options available for each Exchange object type. I've characterized the hierarchy below:

User Interface

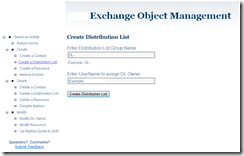

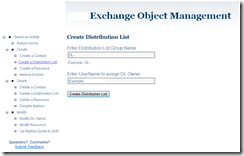

The UI for ExOM and ExReq was developed using ASP.NET 3.5 with ASP.NET AJAX Control Toolkit. I used the Accordion control on the default.aspx page to pass along information on the various modules and maintain a change control log. I used a Master Page to wrap the entire application and used the TreeView control for navigation control.

Subsequent pages look similar to the Create Distribution List page. This page and several others have some interesting features, including leveraging the AJAX Auto-Complete control that is tied to a Web Service that queries Active Directory and .NET validation rules that check against Active Directory to ensure uniqueness.

Active Directory Web Service

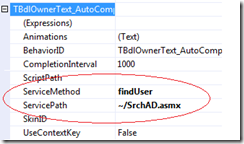

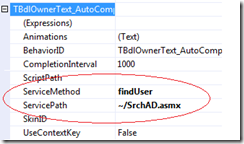

I created a Web Service that could be tied to the AJAX AutoCompleteExtender. I used this control extender extensively for any textbox that required a match to a valid object in Active Directory. I used this technique to reduce the number of errors that would otherwise be generated by mistyped or non-existent entities. This feature also greatly simplified the user experience. By extending a standard textbox with the AJAX extender and then associating the web service path and method, as seen in the example from the properties of the textbox below, a user can now simply type in the first 3 characters of the target Active Directory value and the web service automatically returns a list of matches directly from AD. As the user continues to type in additional matching characters, the list narrows.

The web service code uses the System.DirectoryServices and System.DirectoryServices.ActiveDirectory namespaces and, of course, System.Web.Services. Within the code, several things are happening.

Note: When you create a new .ASMX web service page in Visual Studio, the following line must be un-commented in order to run this code against an AJAX extension:

[System.Web.Script.Services.ScriptService]

First, I wrote a property that binds to the rootDSE and returns the domain's default naming context:

private string domainDNC

{

get

{

Domain dom = System.DirectoryServices.ActiveDirectory.Domain.GetCurrentDomain();

DirectoryEntry rootDSE = new DirectoryEntry("LDAP://" + dom.Name + "/rootDSE");

string domainDNC = (string)rootDSE.Properties["defaultNamingContext"][0];

return domainDNC;

}

}

Next, the directory path, authentication type, and search object are set:

DirectoryEntry de = new DirectoryEntry();

de.Path = "LDAP://ou=xyz," + domainDNC;

de.AuthenticationType = AuthenticationTypes.Secure;

DirectorySearcher deSearch = new DirectorySearcher();

deSearch.SearchRoot = de;

I identify the properties that I want to pull:

deSearch.PropertiesToLoad.Add("displayName");

deSearch.PropertiesToLoad.Add("cn");

deSearch.ClientTimeout = TimeSpan.FromSeconds(30);

deSearch.SizeLimit = 100;

I determine the sort order of the returned values:

SortOption srt;

srt = new SortOption("cn", SortDirection.Ascending);

deSearch.Sort = srt;

I create a search filter and then execute the search and return the values to a collection:

deSearch.Filter = ("(&(&(objectClass=User)(objectCategory=Person)(cn=" + prefixText.Trim() + "*)))");

SearchResultCollection results = deSearch.FindAll();
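One thing worth considering with a filter built by string concatenation: whatever the user types ends up inside the LDAP filter, so characters such as `*`, `(`, `)` and `\` can change the meaning of the search. Below is a minimal sketch of escaping those characters along the lines of RFC 4515 before building the filter. The class and method names (`LdapFilter`, `Escape`, `PersonPrefixFilter`) are hypothetical and are not part of the original application.

```csharp
using System;
using System.Text;

public static class LdapFilter
{
    // Escapes the characters that are special inside an LDAP filter value
    // (backslash, asterisk, parentheses, NUL) using \XX hex notation.
    public static string Escape(string value)
    {
        if (value == null) throw new ArgumentNullException("value");

        StringBuilder sb = new StringBuilder(value.Length);
        foreach (char c in value)
        {
            switch (c)
            {
                case '\\': sb.Append("\\5c"); break;
                case '*':  sb.Append("\\2a"); break;
                case '(':  sb.Append("\\28"); break;
                case ')':  sb.Append("\\29"); break;
                case '\0': sb.Append("\\00"); break;
                default:   sb.Append(c);      break;
            }
        }
        return sb.ToString();
    }

    // Hypothetical helper: builds a person-by-cn prefix filter like the one
    // above, with the typed prefix escaped and the trailing wildcard added.
    public static string PersonPrefixFilter(string prefixText)
    {
        return "(&(objectClass=user)(objectCategory=person)(cn="
               + Escape(prefixText.Trim()) + "*))";
    }
}
```

With a helper like this, the filter assignment could become `deSearch.Filter = LdapFilter.PersonPrefixFilter(prefixText);`, and the same `Escape` call would apply to any other value placed into a filter.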

And last, the tricky part. I take the results and add them to a list that is returned as an array. The list then renders itself into the textbox as a drop-down list that will narrow as the user continues to type characters.

List<String> CandidateList = new List<string>();

foreach (SearchResult sr in results)

{

foreach (string Candidate in sr.Properties["cn"])

{

CandidateList.Add(Candidate);

}

}

return CandidateList.ToArray();
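For readers who want to see the overall shape of the completion method without a directory at hand, here is a small sketch that swaps the Active Directory search for an in-memory list of names. The AutoCompleteExtender calls the service method with parameters named `prefixText` and `count`; the third parameter, `directoryNames`, and the class name `CompletionSketch` are assumptions added purely for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class CompletionSketch
{
    // Returns up to 'count' names that start with the typed prefix,
    // sorted case-insensitively. 'directoryNames' stands in for the
    // cn values that the real service reads from Active Directory.
    public static string[] GetCompletionList(string prefixText, int count,
                                             IEnumerable<string> directoryNames)
    {
        string prefix = (prefixText ?? string.Empty).Trim();

        List<string> matches = new List<string>();
        foreach (string name in directoryNames)
        {
            if (name.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
            {
                matches.Add(name);
            }
        }

        matches.Sort(StringComparer.OrdinalIgnoreCase);

        // Honor the count requested by the extender, if one was given.
        if (count > 0 && matches.Count > count)
        {
            matches.RemoveRange(count, matches.Count - count);
        }
        return matches.ToArray();
    }
}
```

The real web service does the prefix match and sort on the directory side through the filter and sort option; the sketch only mirrors that behavior so the narrowing logic can be tried in isolation.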

Wrapping PowerShell Commands and calling them from C#

There are a couple of ways to instantiate PowerShell from C#. You can call a cmdlet and then append parameters, line by line, to build a command that can then be invoked inside the RunSpace. Or, you can create a string variable that contains the full cmdlet and its parameters, add the variable as a script to the RunSpace, and then invoke it. I chose the latter method because it required fewer lines of code and the PowerShell cmdlet can be customized to use variables called in from the UI.

Pitfall: You MUST run PowerShell within a RunSpace that can be called from the System.Management.Automation.Runspaces library.

To host PowerShell in C#, you must include the following .NET libraries:

using System.Management.Automation;

using System.Management.Automation.Host;

using System.Management.Automation.Runspaces;

The following example demonstrates the code used to invoke PowerShell to mailbox enable a new Exchange user. This code expects the user to exist in Active Directory.

Pitfall: Due to Active Directory replication latency, you must target a specific domain controller and use the -domaincontroller parameter on all PowerShell scripts. Doing so will ensure the object you are creating or targeting is in a proper state.

The first step is to bind to a specific domain controller. Note that I'm looking into a specific Active Directory site for the domain controller.

Domain dom = System.DirectoryServices.ActiveDirectory.Domain.GetCurrentDomain();

DirectoryContext context = new DirectoryContext(DirectoryContextType.Domain, dom.Name);

DomainController dc = DomainController.FindOne(context, "Active Directory Site");

Then I create a PowerShell .NET RunSpace to run any PowerShell cmdlets.

RunspaceConfiguration ExShell = RunspaceConfiguration.Create();

PSSnapInException snapInException = null;

PSSnapInInfo info = ExShell.AddPSSnapIn("Microsoft.Exchange.Management.Powershell.Admin", out snapInException);

Runspace ExShellRunSpace = RunspaceFactory.CreateRunspace(ExShell);

ExShellRunSpace.Open();

Next, create a Pipeline within the RunSpace for each PowerShell cmdlet. In the example below I'm creating two pipelines to handle two discrete PowerShell cmdlets within a single RunSpace.

Pitfall: A pipeline must be created for each PowerShell cmdlet you wish to run within the RunSpace.

Pipeline mbxEnableUser = ExShellRunSpace.CreatePipeline();

Pipeline mbxSetMail = ExShellRunSpace.CreatePipeline();

Create a string that contains the full cmdlet and parameters you wish to invoke. In the example below, in the first string, I'm setting the WindowsEmailAddress attribute on a user object. In the second string, I'm mailbox-enabling the same user on a targeted Exchange database (go here for my blog that explains the process for determining what Exchange database to apply), applying a ManagedFolderMailboxPolicy, setting a PrimarySmtpAddress, and, of course, specifying a domain controller. The variable values tbUserName and rblEmailAddress are called from a textbox and radio button control on the web page. The dc and policy variables are assigned in the code, and the mbxdb variable is read from a text file that is the output of another process that is explained here. You'll note that in the second string, the policy value is wrapped in escaped quotes.

Pitfall: If you call a variable that has spaces, like a policy, you must place quotes around it. The example below demonstrates how to do this.

// Both cmdlets target the same domain controller, per the replication pitfall above.

string mbxMailAttrib = "Set-User " + tbUserName.Text + " -WindowsEmailAddress " + rblEmailAddress.Value +

" -DomainController " + dc;

string mbxEnablestr = "Enable-Mailbox " + tbUserName.Text + " -Database " + mbxdb +

" -ManagedFolderMailboxPolicy \"" + policy + "\" -ManagedFolderMailboxPolicyAllowed" +

" -DomainController " + dc + " -PrimarySmtpAddress " + rblEmailAddress.Value;
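Because these command strings are assembled from values typed into a web page, it may be worth quoting every value, not only the ones known to contain spaces. A minimal sketch, assuming PowerShell's single-quoted string rule (an embedded single quote is written as two single quotes), is shown here. The names `PsQuote`, `Literal`, and `BuildSetUser` are hypothetical, and typographic quote characters would likely need additional handling that this sketch does not attempt.

```csharp
using System;

public static class PsQuote
{
    // Wraps a value in single quotes so PowerShell treats it as one
    // literal argument; embedded single quotes are doubled.
    public static string Literal(string value)
    {
        if (value == null) throw new ArgumentNullException("value");
        return "'" + value.Replace("'", "''") + "'";
    }

    // Hypothetical helper: assembles a Set-User command with every
    // user-supplied value quoted.
    public static string BuildSetUser(string identity, string emailAddress, string domainController)
    {
        return "Set-User " + Literal(identity)
             + " -WindowsEmailAddress " + Literal(emailAddress)
             + " -DomainController " + Literal(domainController);
    }
}
```

A value such as `Default Policy` then arrives as a single argument, and a value containing `;` or a quote cannot end the intended command early. Building the command with the cmdlet name and parameters added separately, the first approach described earlier, avoids string quoting altogether and is another option to weigh.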

Pass the string into the pipeline using .AddScript() and then invoke the command. When you invoke the pipeline, you are executing the PowerShell commands and returning status.

mbxSetMail.Commands.AddScript(mbxMailAttrib);

mbxSetMail.Invoke();

mbxEnableUser.Commands.AddScript(mbxEnablestr);

mbxEnableUser.Invoke();

Last, I do some error handling, passing the result back to the web page. Admittedly, my error handling could be more robust and I could decipher the error codes and pass a more friendly string, but remember, I'm not a dev-guy and isn't that a Get-Out-of-Jail card for dev wannabes?

if (mbxEnableUser.Error.Count != 0)

{

StringBuilder SB = new StringBuilder();

foreach (object item in mbxEnableUser.Error.ReadToEnd())

{

SB.AppendLine(item.ToString());

}

lblError.Text = "Error (Pipeline): " + SB.ToString();

}

Validation Techniques

I used validation techniques all over the place with this application. I did this to ensure two things: that the end-user did not type in bad data, and that various attributes were unique in the directory. I used several ASP.NET validators, all built into Visual Studio 2008. Within the UI I used the RequiredFieldValidator, RegularExpressionValidator, and CustomValidator. While the Required and Regex validators don't require much in the way of discussion, the Custom Validator does.

The Custom Validator creates a method that is placed on the code page and will cause Page.IsValid to return false if the validation fails. This custom validation method can contain any code, so it was perfectly suited to my use. In the example below, I use a custom validator to determine whether the name of a resource mailbox is already used in Active Directory.

This example may look familiar if you read the section on the Active Directory web service above. Just like the AD web service, I get the Active Directory domain name using the standard .NET library.

private string domainDNC {

get {

Domain dom = System.DirectoryServices.ActiveDirectory.Domain.GetCurrentDomain();

DirectoryEntry rootDSE = new DirectoryEntry("LDAP://" + dom.Name + "/rootDSE");

string domainDNC = (string)rootDSE.Properties["defaultNamingContext"][0];

return domainDNC;

}

}

Next, I target a specific OU to keep the cost of the search down and authenticate securely. Note that this code is located within the Custom Validation method.

protected void ValidateResourceMBXName_ServerValidate(object source, ServerValidateEventArgs rgnamearg) {

try {

DirectoryEntry deName = new DirectoryEntry();

deName.Path = "LDAP://ou=xyz," + domainDNC;

deName.AuthenticationType = AuthenticationTypes.Secure;

Set up the search, pass in the value you wish to search for, and invoke the search.

DirectorySearcher deSearchName = new DirectorySearcher();

deSearchName.SearchRoot = deName;

deSearchName.SearchScope = SearchScope.Subtree;

deSearchName.Filter = "(cn=" + rsrcName + ")";

SearchResult rsrcResult = deSearchName.FindOne();

The last step is to set a boolean variable to true or false and pass that back to the application for processing.

Pitfall: A successful search means that there is already an AD account with the name the user is trying to create, so the name is not unique and validation should fail. This is kind of backwards from what you would normally expect: finding a result is the failure case.

// FindOne() returns null when nothing matches, so test the result itself.

if (rsrcResult != null) {

uniqueRGname = false;

}

else {

uniqueRGname = true;

}

}

catch (Exception) {

uniqueRGname = true;

}

}
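The decision at the heart of this validator can be isolated from the directory code, which makes it easier to reason about. The sketch below takes the lookup as a delegate, so the names `UniquenessCheck`, `IsNameAvailable`, and `existsInDirectory` are all hypothetical stand-ins. Note one deliberate difference from the listing above: when the lookup throws, this sketch reports the name as not available rather than as unique. That is a design choice, not the original behavior.

```csharp
using System;

public static class UniquenessCheck
{
    // existsInDirectory returns true when the directory already holds
    // an object with the given name (for example, FindOne() != null).
    public static bool IsNameAvailable(string name, Func<string, bool> existsInDirectory)
    {
        if (name == null || name.Trim().Length == 0)
        {
            return false; // nothing to validate
        }

        try
        {
            // A match in the directory means the name is taken.
            return !existsInDirectory(name.Trim());
        }
        catch (Exception)
        {
            // Fail closed: if the directory cannot be queried,
            // do not claim the name is free.
            return false;
        }
    }
}
```

In a CustomValidator handler, the result would typically be assigned to the `IsValid` property of the `ServerValidateEventArgs` argument so that the page reflects the outcome.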

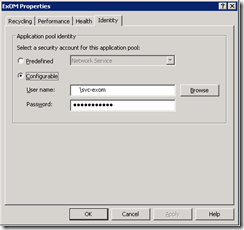

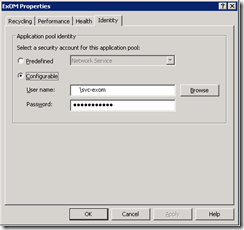

Deployment

There are several pitfalls and some potential security issues related to deployment. Key among the issues is the lack of remoting in PowerShell v1. PowerShell v1, in combination with Exchange 2007, uses direct authentication against the domain to ensure the user has the rights required to execute a command. When calling PowerShell from a web application, the web server uses the application pool identity settings as the context under which it passes the application request. This is problematic for us, because we don't want to give administrative-level rights to Exchange to the built-in accounts predefined by the application pool. By default the web server uses Network Service as its "run-as" account.

Pitfall: When in development, the built-in web server used for debugging with Visual Studio hides this problem if you are logged on with credentials that have Exchange Administrative rights.

To further explain the problem, the authentication path for this application goes something like this:

1. The user authenticates to the web server.

2. The user submits some data to the server that calls a PowerShell command.

3. The Web Server uses its application pool identity settings and passes the command to Exchange.

4. The command fails because the standard application pool identity is the built-in Network Service which does not have rights to Exchange.

Remoting, which is available in PowerShell v2, can solve this problem by executing under the credentials of the logged-on user, overriding the application pool identity settings. However, this application was developed using PowerShell v1 and, as a result, required me to finesse the security settings. I did this by creating an Exchange administrative proxy account that I used on the application pool.

I also ensured that no impersonation was configured in the web.config file. Impersonating a fixed account there is a major security risk because the credentials are placed in clear text in the web.config file.

Conclusion

So there you have it. The foundation for a simple application that can manage Exchange using PowerShell without distributing the Exchange Console, PowerShell, or the Exchange Shell. There are tons of other possibilities, and once you have this foundation down, you'll be in a great position to get really creative.
